James Bond films have a certain formula to them. It is more interesting when seen from the perspective of the villain.

He has long been adjacent to money and power, but craves more. Several years ago, he successfully escaped his low-on-the-ladder job at an existing institution. He built a base of power that is independent of institutions. From it, he successfully puppets any organization he needs to. He and his organization are from elsewhere, everywhere, all at once. They have no passport and fly no flags; these concepts are thoroughly beneath them. They move around frequently and are always where the plot requires them to be, exactly when it requires them to be there. No law constrains them. Governments scarcely exist in their universe. To the limited extent they come to any government’s attention, no effective action is taken. The villain rises to the heights of influence and power.

This continues for years.

Then we suddenly hear E minor major 9. We begin the film, telling the end of the story, mostly from Bond’s perspective. The villain is just another weirdo who dies at the climax in act three.

Life imitates art

For years I have used the phrase “Bond villain compliance strategy” to describe a common practice in the cryptocurrency industry.

In it, your operation is carefully based Far Away From Here. You are, critically, not like standard offshore finance, with a particular address in a particular country which just happens to be on the high-risk jurisdiction list. You are nowhere because you want to be everywhere. You tell any lie required to any party—government, bank, whatever—to get access to the banking rails and desirable counterparties located in rich countries with functioning governments. You abandon or evolve the lie a few years later after finally being caught in it.

Your users and counterparties understand it to be a lie the entire time, of course. You bragged about it on your site and explained it to adoring fans at conferences. You created guides to have your CS staff instruct users on how to use a VPN to evade your geofencing. The more clueful among your counterparties, who have competent lawyers and aspirations to continue making money in desirable jurisdictions, will come to describe your behavior as an “open secret” in the industry. They will claim you’ve turned over a new leaf, given that the most current version of the lie merely rhymes with the previous version.

And then we begin the third act.

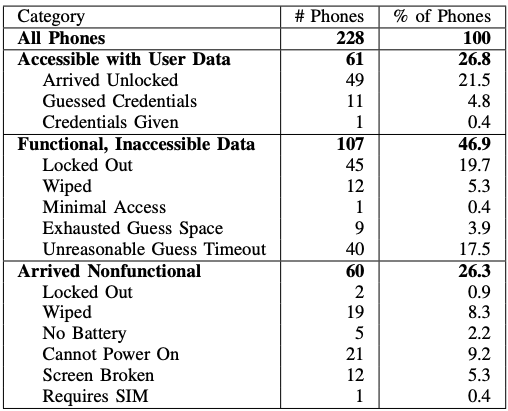

So anyhow, Binance and its CEO Changpeng Zhao (known nearly universally as CZ) have recently pleaded guilty to operating the world’s largest criminal conspiracy to launder money, paying more than $4 billion in fines. This settles a long-running investigation involving the DOJ, CFTC, FinCEN, and assorted other parts of the U.S. regulatory state. Importantly, it does not resolve the SEC’s parallel action.

How’d we get here?

A brief history of Binance

Binance is, for the moment, the world’s largest crypto exchange. Its scale is gobsmacking and places it at approximately the 100th largest financial institution in the world by revenue. The primary way it makes money is exacting a rake on cryptocurrency gambling, in particular, leveraged bets using cryptocurrency futures. To maintain its ability to do this, it runs a worldwide money laundering operation with the ongoing, knowing, active participation of many other players in the crypto industry, including Bitfinex/Tether, the Justin Sun empire, and (until recent changes in management) FTX/Alameda.

In his twenties, CZ worked in Japan (waves) and New York for contractors to the Tokyo Stock Exchange, then for Bloomberg. In about 2013 he got interested in crypto and joined a few projects, including becoming CTO of OKCoin, another Bond villain exchange. Being a henchman is an odd job, so he decided to promote himself to full-fledged villain. In 2017 he did an unregistered securities offering (then commonly spelled “ICO”) for Binance.

Binance rose meteorically from then until recently, essentially gaining share at the expense of waning Bond villains. To oversimplify greatly, it carved up the less-regulated side of the crypto market with FTX, with Binance mostly taking customers in geopolitical adversaries of the U.S. (most notably greater China) and FTX mostly taking them in geopolitical allies (most notably South Korea, Singapore, and the U.S.). But the cartels did not partition the globe in a way which fully insulated them from each other.

These operations were intertwined and coordinated. How intertwined? Binance was a part-owner of FTX until SBF decided successfully capturing U.S. regulators was a lot more likely if his cap table named more Californian trees and fewer Bond villains. How coordinated? The name of the Signal chat was Exchange Coordination.

This eventually led to grief as CZ (mostly accurately) perceived that SBF was using the U.S. government as a weapon against Binance. He retaliated with strategic leaks, leading to a collapse in the value of FTX’s exchange token, a run on the bank, and FTX’s bankruptcy.

Where was Binance in all of this?

Binance did a heck of a lot of business in Japan in the early years. This officially ended in March 2018 when the Financial Services Agency, Japan’s major financial regulator, made it extremely clear that Binance was operating unlawfully in serving Japanese customers without registering in the then-relatively-new framework for virtual currency exchange businesses.

For an only-sometimes-following-crypto skeptic like me, this was the thing which brought Binance to my attention. Binance was piqued, saying that they had engaged the FSA in respectful conversations and then learned they were being kicked out of the country from a news report. Having spent roughly twenty years getting good at understanding how Japanese bureaucratic procedures typically work, I surmised “...that is a very plausible outcome if you start your getting-to-know-you chat with ‘Basically I am a James Bond villain.’” I think that was the first time the metaphor came to me.

The order expelling them listed their place of business as Hong Kong, with a dryly worded asterisk stating that this was taken from their statements on the Internet and “...there exists the possibility that [this information] is not accurate as of the present moment in time.”

That, Internets, is how a salaryman phrases “I am absolutely aware that you maintain a team and infrastructure in Japan.”

Did Binance exit Japan? Well, that depends. Did CZ personally return to Japan? Probably not. Does Binance continue to serve Japanese customers? Yes, though (Bond villain!) it pretends not to. Where does Binance’s exchange run as a software artifact? As a statement of engineering fact: in an AWS data center in Tokyo. ap-northeast-1, if you want to get technical.

(Someone needs to write an East Asian studies paper on how Tokyo became Switzerland for Asian crypto enthusiasts due to a combination of governance, network connectivity, latency, and geopolitical risk. I nominate anyone other than me.)

Binance also maintained an office in Shanghai, with many executives working there. It was raided by the Chinese police. Binance denied that the office existed. The spokesman’s quote was pure Bond villain: “The Binance team is a global movement consisting of people working in a decentralized manner wherever they are in the world. Binance has no fixed offices in Shanghai or China, so it makes no sense that police raided on any offices and shut them down.”

This was a lie wrapped around a tiny truth. Internet-distributed workforces containing many mobile professionals do not exactly resemble a single building with all your staff and your nameplate on the door.

Of course, on the actual substantive matter, it was a lie.

We know it was a lie, because (among many other reasons) we have the chat logs where the parts of their criminal conspiracy that operated in the U.S. complain that the parts of their criminal conspiracy that operated in Shanghai kept information from them that they needed to do their part to keep the crime operating smoothly. Coworkers, man.

Binance’s Chief Compliance Officer, one Samuel Lim, apparently is not a fan of The Wire and never encountered Stringer Bell’s dictum on the wisdom of keeping notes on a criminal conspiracy. He writes great copy, most memorably “[We are] operating as a fking unlicensed security exchange in the U.S. bro.” He and many other Binance employees have helpfully documented for posterity that their financial operations teams were, for most of corporate history, working from Shanghai.

Binance also operated in the state of Heisenbergian uncertainty, sometimes known as Malta. Malta has a substantial financial services industry, which welcomed Binance with open arms in 2018 and then pretended not to know it in 2020. This continues Malta’s proud tradition of strategic ambiguity as to whether it is an EU country or a rentable skin suit for money launderers. ¿Por qué no los dos? Despite this, Binance would continue claiming to customers and other regulators for a while that it was fully authorized to do business by Malta.

Binance operates in Russia, to enable its twin businesses of cryptocurrency speculation and facilitating money laundering. In 2023 it pretended to sell its Russian operations.

Binance operated in many other jurisdictions. The U.K.: kicked out. France: under investigation. Germany, the Netherlands, etc., etc.: Binance required non-teams of non-employees at non-headquarters to keep track of all the places where it wasn’t registered but was doing its non-crimes.

A core cadre of the Binance executive team is currently in the United Arab Emirates, to which CZ hopes to return. He professes that he will await sentencing there, and pinkie swears that he will totally get back on a plane to the U.S. to show up for it. For reasons which are understandable by anyone with more IQ than a plate of jello, the U.S. is skeptical he will make good on this promise, and is currently, as of Thanksgiving 2023, attempting to keep him in the U.S., where he is physically present to sign what Binance advocates believe is the grand compromise that puts all his legal worries behind him.

A defining characteristic of Bond villains is that they think they are very smart and everyone else is very stupid. To be fair, when you play back the movie of the last few years of their life, they keep winning and their adversaries look like nincompoops.

Then, they get extremely confident and begin to make poor life choices.

How did this work for so freaking long?

Much like the optimal amount of fraud is not zero, the global financial system institutionally tolerates (and actively enables) some shenanigans at the margin. You can think of Binance, Tether, FTX, and all the rest as talented amateurs capable of engaging the services of professionals. They followed advice and grew like a slime mold into the places where shenanigans are wink-and-a-nudge tolerated.

Why tolerate shenanigans? Some shenanigans are necessary to keep the world spinning.

China has grown into an economic superpower via capitalism while at times officially making private property ownership illegal. That circle cannot be squared. We, the global we, want Chinese people to not live in grinding poverty. That requires economic growth. Economic growth required making things the world wanted. Selling those things required integration into the global economic order. That required a willingness to ignore things the Communist dictatorship said were crimes, while simultaneously saying “Oh, bankers definitely, definitely shouldn’t facilitate billionaires committing crimes.”

As I’ve remarked previously, we similarly have complicated preferences with regard to Russian oligarchs. In some years, money laundering for them is, how might a gifted speaker phrase this, “[b]ringing our former adversaries, Russia and China, into the international system as open, prosperous and stable nations.” In other years, money laundering for them is described as funding Russia’s war machine.

Finance is messy because the world is messy.

Some of the shenanigans aren’t strictly necessary or planned, but society considers the effort required to curtail them wasteful, or a compromise of our other goals. We had all the technology required to CC regulators on every banking transaction years before slow database enthusiasts decided all transactions would eventually be publicly readable and persisted forever. We simply chose not to implement it. It would have been quite expensive and infringed on the privacy of many ordinary people and firms.

But Binance, and others, forgot the critical step, to the annoyance of their engaged professionals: you have to eventually stop growing and keep to a low profile. You have to simply be content with being fantastically rich. If you do, you can continue showing up to the nicest parties in New York, owning expensive real estate in London, and commuting to a comfortable office in Hong Kong or the Bahamas or many other places.

But crypto kept growing until the control systems could not ignore it any longer. And the control systems can no longer avoid knowledge of the crimes.

So, so many crimes. Many of them are ones crypto advocates consider utterly inconsequential, like serially lying on paperwork. And Binance also gleefully and knowingly banked terrorists and child pornographers. That’s not an allegation; that has been confessed to. There is no line a Bond villain will not cross. They will cross lines performatively.

And, surprising even me, some crypto characters consciously adopt the aesthetic of Bond villains. Le Chiffre, the villain in Casino Royale, owns a fictional house. That house exists in the physical world, where a location scout said “This certainly looks like the sort of place a Bond villain would live.” Jean Chalopin owned that literal, physical house. (cf. Zeke Faux’s Number Go Up, Kindle location 1175.) As previously discussed, Chalopin is a professional bagman, and his largest client was previously Tether.

What happens to Binance now?

Some believe that Binance admitting to being a criminal conspiracy is actually good news, not merely in the memetic “good news for Bitcoin” sense, but because this upper-bounds Binance’s exposure somewhere below “The United States forcibly dismantles the most important crypto exchange and much of the infrastructure it touches.”

The immediate consequences are about $4 billion in fines. Despite being one of the world’s largest hodlers, the U.S. will not accept payment in Bitcoin, and Binance has agreed to pay in installments over the next two years. CZ and Binance will be sentenced in February.

Some people think the grand bargain was meant to keep him out of prison. The actual text of the agreement says that Binance gets to walk away from some parts of it if he is sentenced to more than 18 months. (Senior officials told the NYT they are contemplating asking for more than that.)

Probably more consequentially, the settlements are going to force Binance to install so-called monitors internally. Those monitors are effectively external compliance consultants, working at Binance’s expense in a contractual relationship with it, but whose true customer is the United States. The monitors have pages upon pages of instructions as to exactly how they are to reform Binance’s culture by implementing recommendations to bring onboarding, KYC, and AML processes into compliance with the law everywhere Binance does business, and sure, that is part of the job.

But the other part of the job is that they’re an internal gateway to any information Binance has ever had, or will ever have. This can be queried essentially at will by law enforcement, with Binance waiving substantially all rights to not cooperate.

You might reasonably ask “Hey, doesn’t the U.S. typically require a warrant to go nosing about in the business of people who haven’t been accused of a crime?” And, to oversimplify half a century of jurisprudence, one loses one’s reasonable expectation of privacy if one brings a business into one’s private affairs. All of Binance’s customers and counterparties gave up their privacy to Binance by transacting with it. The U.S. has Binance’s permission to examine all of Binance’s historical, current, and future records, at will, for at least the next three years. It also secured a promise that Binance would assist in any investigation.

And so, if one were hypothetically not yet indicted by the U.S., but had hypothetically done business with one’s now-confessed money launderer, one’s own Fourth Amendment protections would not stop the U.S. from hoovering up every conversation and transaction one had with Binance.

All of this is certainly good news and we can put this messy chapter behind us, say crypto advocates.

How are Bond villains actually regulated?

Was the Bond villain strategy ever going to work? Did Binance have a reasonable likelihood of prevailing on jurisdictional arguments, like telling the U.S. that the Binance mothership had no U.S. presence and so it should not be subject to U.S. law? No. Crikey, no. The system has to be robust to people lying or acting from less-salubrious jurisdictions, at least to the extent it cares about being effective, and at least some of the time it does actually care about being effective.

The U.S.’s point of view on the matter, elucidated at length in any indictment for financial crimes, is that if you have ever touched an electronic dollar, that dollar passed through New York, and therefore you’ve consented to the jurisdiction of the United States. Dollarization is very intentionally wielded like a club to accomplish the U.S.’s goals.

Over the years, some not-very-sympathetic people have run afoul of this, and even they are much more sympathetic than Binance. Binance intentionally used the U.S. market and infrastructure to make money. The U.S. was essential to their enterprise. Many peer nations can, and will, make a similar argument.

Binance had tens of percent of their book of business in the U.S. They were absolutely aware of this, knew that some of those users were their largest VIPs or otherwise important, and took steps to maximize U.S. usage while denying they served Americans.

Their engineers didn’t accidentally copy the exchange onto AWS or deploy it to Tokyo by misclicking repeatedly. The crypto industry playbook for doing sales and marketing looks like everyone else’s playbook for doing sales and marketing. They get on planes, present at events, send mail, hire employees (or otherwise compensate agents), open offices, etc., etc.

If having an email address meant you didn’t exist in physical reality anymore, the world would be almost empty.

CZ personally signed for bank accounts at U.S. banks for some of his money laundering subsidiaries, like Merit Peak and Sigma Chain. The SEC traced more than $500 million through one of those accounts.

One major rationale for KYC legislation, as discussed previously, is that it makes prosecuting Bond villains easier. Even if compliance departments at banks are utterly incompetent at detecting Bond villains at signup, having extracted the Bond villain’s signature on account opening documents is very useful to prosecutors a few years down the road. Why do the hard work of quantifying exactly how many engineers work on which days at Binance’s offices in San Francisco when you can do the easy thing and say “Hey, fax me the single piece of paper where the Bond villain signed up for responsibility for all the crimes, please.”

Why do Bond villains sign for bank accounts in highly regulated jurisdictions? Partly it is because of beneficial ownership KYC requirements to open bank accounts. Partly it is because finding loyal, trustworthy subordinates is very hard if you’re a Bond villain, and Bond villains (sensibly!) worry that if the only name on the paperwork is a henchman, eventually that henchman might say “You know, actually, I would like to withdraw the $500 million I have on deposit with you.”

(This is why Bond villains frequently have, e.g., the mother of their children sign for bank accounts. Bond villains, again, think everyone else is stupid, and that no one will cotton on to this.)

A subgenre of challenges in people management for Bond villains: you have to hire experienced executives in the United States to run the U.S. fig leaf for your global criminal empire. The people you hire will, by nature, be experienced financial industry veterans who are extremely sophisticated and have access to good lawyers. This combination of attributes is the recipe for being the best in the world at filing whistleblower claims. I expect a few former executives at Binance U.S. will eventually turn out to have taken home the most generous pay packages in the entire financial industry for their few years there between 2018 and 2022.

To make this palatable to the American public, those whistleblower rewards are not courtesy of the taxpayer; they’re courtesy of money seized from previous Bond villains. A portion of Binance’s settlement(s) will go to pay the whistleblowers at the next Bond villain. It’s the circle of life.

News that will break shortly

Different regulators have differing ability to prosecute complex cases, but they basically all have the ability to read simple legal documents. That is one of the things they are best at doing.

Binance will suffer a wave of tag-along enforcement actions, in the U.S. and globally. Partly this will be for face-saving; global peers of the U.S., in which Binance has transacted billions of dollars, will largely not want to signal “Oh we’re totes OK with money laundering for terrorists and child pornographers”, and so they’re going to essentially copy/paste the U.S. enforcement actions. They will then play pick-a-number with Binance’s new management team, who will immediately cave.

The earliest version of this is probably only weeks away, but Binance will deal with it for years.

More interestingly, and likely more expensively, the SEC is going to hit Binance like a ton of bricks. They were one of the few regulators which opted out of consolidating with the DOJ’s deal. They think they have Binance dead to rights (they do), and tactically speaking, the deal makes their life even easier. Binance has waived the ability to contest some things the SEC will argue. The SEC can now proxy requests for evidence to Binance’s monitors through other federal agencies.

Binance has had the enthusiastic cooperation of many people who walk in light in addition to their co-conspirators who walk in shadow. Those people, lamentably inclusive of some in the tech industry who I feel a great deal of fellow-feeling for, are going to start cutting off access to Binance. Compliance departments at their corporate overlords, which were either entirely in the dark or willing to be persuaded that a new innovative industry required some amount of flexibility with regards to controls, are (today) having strongly worded conversations which direct people to lose Binance’s number.

Binance has pre-committed to helping with the efforts to cauterize them from the financial system. They also pre-committed to assisting in, specifically, the investigation of their sale of the Russia business. That investigation will conclude that the sale was a sham (a Bond villain lied?) designed to avoid sanctions enforcement. Ask your friends in national security Washington how well that is going to go over.

Binance is going to be slowly ground into a very fine paste.

Many crypto advocates believe the U.S. institutionally wants to see Binance reform into a compliant financial institution. They are delusional. The U.S. is already practicing their lines for the next press conference. This course of action allows them to deflate Binance gradually while minimizing collateral damage, which responsible regulators and law enforcement officials actually do care quite a bit about.

The U.S. is aware that many high-status institutions and individuals, which are within the U.S.’s circle of trust, actively collaborated with Binance. Most of them will escape serious censure.

A few examples will be made, especially in cases where it is easy to make an example, because the firm is no longer operating financial infrastructure. This will take ages, and it will be public but relatively quiet, insofar as senior U.S. regulators will not get on TV to make international headlines announcing it. It will be one of the stories quietly dribbled out on a Friday, noticed mostly by people who draft PowerPoint decks for Compliance presentations. If you want a flavor of these, join any financial firm and pay attention during the annual training; you’re stuck going to it anyway.

You seem a little smug, Patrick

I’m not breaking out the Strategic Popcorn Reserves yet, but I will admit to a certain amount of schadenfreude here. The world was grossly disordered for many years. It has corrected a relatively small amount.

We are a nation of laws. I’d support reforming some of them; a lot of the AML/KYC regulatory apparatus harms individuals who have done no wrong. Much is not well-calibrated in terms of societal costs versus occasionally facilitating a Bond villain’s self-immolation.

However, in the interim, one cannot simply gleefully ignore the laws because the opportunity to do so allows you to become wealthy beyond the dreams of avarice. Even staunch crypto advocates looking at Binance’s conduct see some things they are not happy to be associated with. Not all of the crimes were victimless crimes.

There exists the possibility that there is some salvageable licit business in crypto. People enjoy gambling. But if you factor out the crime, the largest casino in the world is not that interesting a business relative to the one that Binance et al ran the last few years.

I do not know if we’ll ever have a world with this scale of crypto businesses without the crime. The crime was the product. An opportunity to transform global financial infrastructure was greatly overstated and has not come to pass. I do not expect this to change.